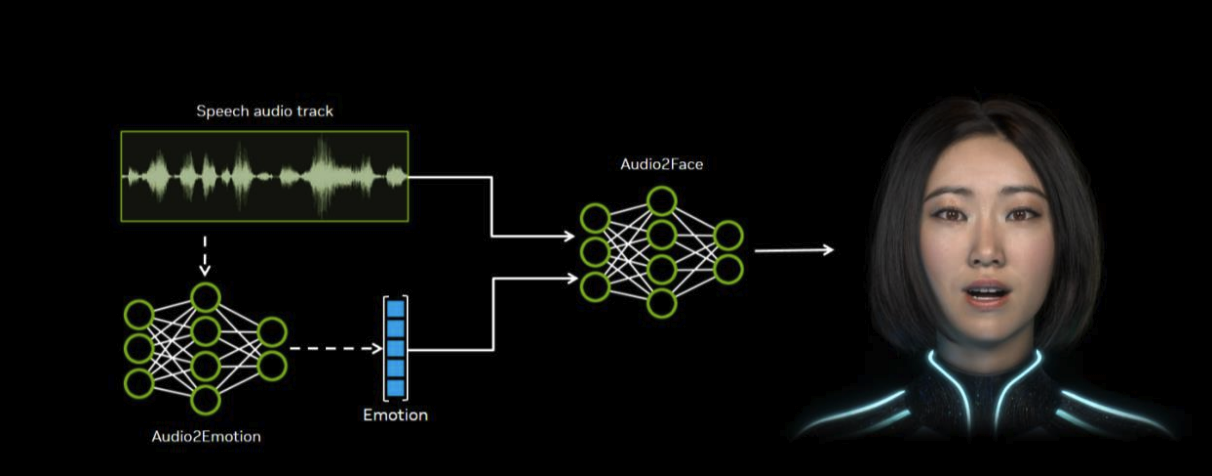

Nvidia has announced that its Audio2Face animation technology is going open source. On paper, that should make it much easier for a wide range of game developers to add realistic facial animation to their characters, including during real-time conversations with gamers.

To recap, in Nvidia's own words, "by using large language and speech models, generative AI is creating intelligent 3D avatars that can engage users in natural conversation, from video games to customer service. To make these characters truly lifelike, they need human-like expressions."

The only really obvious giveaway that you're dealing with an early, experimental system is a slight delay in responses, which makes for "awkward pauses" in conversation.

As for what's being released in open source form, we're talking about the Audio2Face SDK, audio plugins for inputting voice streams, training frameworks, sample training data, a library of facial models and a dedicated Unreal Engine 5 plugin. The open source release also includes Audio2Emotion models, which can "infer" emotional state from audio in real time.

Nvidia says that game devs already using Audio2Face include Codemasters, GSC Game World, NetEase and Perfect World Games, while independent software vendors (ISVs) using it include Convai, Inworld AI, Reallusion, Streamlabs and UneeQ.

Of course, the catch is that Nvidia's broader ACE platform is, inevitably, tied at least to some extent to Nvidia's GPUs, although as far as we understand, there's no really obvious reason why ACE features shouldn't run on non-Nvidia GPUs.

But as with so many exciting technologies from Nvidia, part of the reason these features exist seems to be to push gamers onto Nvidia GPUs, or to keep them there if they're already on Nvidia. So Nvidia's default position is to make these features Nvidia-only and leave the likes of AMD playing catch-up. It was ever thus.